👗 what tech policy can learn from textiles

🪅 A LOT

Stop me if you’ve heard this before (*you have) - but a lot of modest but meaningful interventions that count as ‘tech policy’ don’t actually have to be all that ~fancy.~ They also don’t always need to be new. I am a fan of shopping our regulatory closet and asking whether we can use or amend tools that we already have - or at least take some inspiration from them. Like, wide leg cargo pants are back, have you noticed that? Gift from the gods.

Lately I have been thinking that the Textile Labelling Act has a lot of useful little clues (or cues?) for people who care about tech policy (broadly) - as does the Canada Consumer Product Safety Act, which has a bunch of relevant regs under it, like:

There’s a simplicity to the Textile Labelling Act (1985) that I adore - you just have to label any textile fibre, yarn or fabric before it’s sold in Canada to consumers. There’s also the Consumer Packaging and Labelling Act (also 1985), which states that if you sell a prepackaged non-food product in Canada, it has to be labelled with accurate and meaningful information. Also: ANY prepackaged, non-food product - that’s a lot of stuff.

Synthetic media also needs to be labelled with accurate and meaningful information.

Why lean on something as simple as labels? Because they are where we could start to solve for the murkiness of online life. We all need and deserve basic disclosure. Privacy policy tries to help unlock this for us by shifting to an explicit consent model and/or making terms and conditions more digestible and less dense. But privacy policy winds up being a bit of a gate - once we ‘accept,’ we don’t tend to look back or re-evaluate that decision.

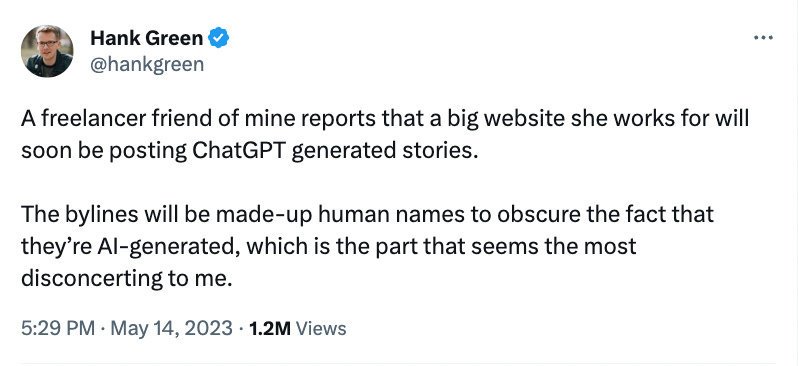

Synthetic media - like text, images, or music produced algorithmically through generative models such as large language models (LLMs) - has a slightly different directional angle. Currently, such content tends to be presented to us in a disguise of sorts - attempting to ‘pass’ for material made by a human. For instance, chatbots are often designed to seem as if a person is engaging with you, not a program (this bothers me SO MUCH, omg). And yet falsely claiming to be a human in an online interaction could/should be seen as a false or misleading representation under the Competition Act. Chatbots should be clearly labelled, too. They should not be able to masquerade as humans.

Synthetic media is super similar. If we are to consider consuming stuff conjured by artificial intelligence, we should be able to know what made it (i.e. model or human) and if it is a model, then why not understand the inputs to that model, too? That’s step 2.

Consumers can be powerful here in helping to resist/revolt against (lazily deployed but technically sophisticated) synthetic media because they can vote with their feet (or wallets, depending on the cliche you prefer). In order to do that, we need to know what is what. Right now, the landscape is much too murky and that’s uncool.

Back to not being “too fancy.” Like, I’m into basic things and fully embracing cringe core right now as a new parent (denim on denim, goofy running shoes, fanny pack with diapers in it, etc.) and I am doubling down on that consumer protection lens for the digital economy as part of that persona (it’s called being ALL IN). IF we start with that perspective, I think it naturally follows that people need to know how something was made, and what the inputs were. I also think it would be difficult to take firms that oppose such disclosure seriously. Like, if you want to use AI to make a song, go for it - but it shouldn’t be permissible to pass that off to the public alongside the music we are used to.

🏷️ We need labels in other places, too.

I (still, always, forever) think that we could label private label products with the name of the parent company so that shoppers understand when competition is a smokescreen. This would be a simple intervention to help unmask corporate consolidation through awareness. We should also label when self-preferencing is occurring in search (with the ability to switch away from such sorting to alternate models) and we need to label personalised pricing with an explanation of the factors that have influenced or are influencing a quote.

The first step to reliably achieving more autonomy in these MURKY marketplaces is getting beyond the permissive yes/no binary and towards more consistent transparency. Labels help to address that unknowability by giving people basic information instead of hoping for some kind of digital-literacy-by-osmosis to happen.

Canada recently announced a collaboration across the Competition Bureau, CRTC, and Privacy Commissioner called the Canadian Digital Regulators Forum to “keep pace with rapid changes in the digital economy.” There’s also the new Parliamentary Caucus on Emerging Technology (PCET). I think that labels could be part of this renewed agenda to better protect Canadian consumers. 😘

I realized that this post echoes a Maclean’s article from the President of MILA (!) and I love that. I come to labels from the policy precedent we have, as well as a ‘misleading’ lens. She comes to it because labels can “force a certain amount of AI literacy on the average person.” Labels - so hot right now.

I think that labels are a good idea. The tricky bit is enforcement. What if the website with AI-generated stories is a platform website which has an API for uploading stories and a third party uploads the AI-generated stories with the fake bylines? What if the uploader is not located in Canada?

What about a warning label on a credit card?